In the past few years, artificial intelligence has stepped up its game dramatically and, as a result, gained tremendous traction with the general public. Although AI had been in development long before, the tipping point of public interest came when ChatGPT, a text-based machine learning program developed by OpenAI, garnered attention in December 2022. In January 2023, not long after its launch, ChatGPT became the fastest-growing consumer application at the time to reach 100 million users, a record later broken by the social media platform Threads in July 2023. ChatGPT’s explosion into the mainstream marked a grand turning point for AI, one that would fundamentally change the way society approaches many tasks, especially writing.

Students are very familiar with these changes, as text-generating programs such as ChatGPT were banned in many schools across the United States. But that was early in 2023. What has been happening with AI since then, now that 2024 is upon us? Well…

Google’s answer to last year’s AI craze, Gemini, landed in hot water toward the end of February over its apparent inability to generate images of Caucasian subjects. When prompted to create an image of a Viking, for example, it would depict a Black or Asian person. This was seen as problematic because it did not accurately represent the demographics of the Vikings, who were almost entirely Caucasian.

Naturally, a debacle that gained this much exposure on social media demanded a response from Google, and the company delivered one. AI image models are frequently trained on data dominated by Caucasian male subjects and tend to make errors when depicting people who do not fit that description. Google had tried to counter this bias in Gemini, but the company admitted that “Gemini had been calibrated to show diverse people but had not adjusted for prompts where that would be inappropriate.”

Despite this Google-sized bombshell, Gemini is not the biggest AI controversy so far this year. In February, OpenAI revealed its latest endeavor: a video-generating model dubbed “Sora,” which it released to a very small, select group of users alongside the announcement. A year ago, AI-created videos looked little different from a colored flip book drawn by a five-year-old. Sora now produces professional-looking videos that, at first glance, are nearly indistinguishable from footage shot by actual professionals. Unsurprisingly, this sudden leap has caused concern around the world.

First, there are the ethical dilemmas that come with all AI-produced content: whether it counts as stolen work, and whether it will make certain jobs obsolete. Where Sora differs from other AI tools, however, is that it has the capability to wipe out an entire industry.

YouTubers Marques Brownlee and Matthew Judge, known online as DarkViperAU, have both noted that Sora could kill the entire stock footage market. Companies like Storyblocks charge users hundreds of dollars a year for access to a large, though limited, library of stock footage. Such footage has been used in video production for a very long time and has become almost a staple of online content. Now along comes a program that can generate just about anything you describe in a matter of seconds, in footage that is nearly indistinguishable from reality. It is easy to see why people fear this outcome.

Further still, people are fearful of what the average person could do with this technology if it were released to the public. In the wrong hands, it could be used to fabricate footage that falsely frames people for crimes. And if technology this capable already works, other companies will follow suit, and there is a strong chance that at least one of them will not put the same safeguards in place that OpenAI has with Sora. The result could be a publicly available tool with no watermark to distinguish the real from the fake. It is a frightening thought, so what can be done?

There’s only one real, attainable solution to this dilemma: remain vigilant. Whenever you see any form of media, look for imperfections, anything that could indicate it isn’t real. Truthfully, it is disheartening that such a game-changing technology brings mostly concern, all because of the ill intentions of a select few. It is unfortunate that the only real answer to a problem like this is to scrutinize even the most ordinary media for clues about how it was made, but that is most likely how it will play out.

Most likely, but not certainly, because here’s the good news: we are not at that stage yet. Sora still has extremely limited access, and if the Gemini situation has proved anything, it’s that even companies that have worked on programs like this for years still run into plenty of problems. Smaller companies that do not share OpenAI’s concerns will have a very difficult time perfecting a program that even the specialists cannot get exactly right. The future might look bleak at first glance, but remember everything it took to get to this point; we’ve still got a ways to go.

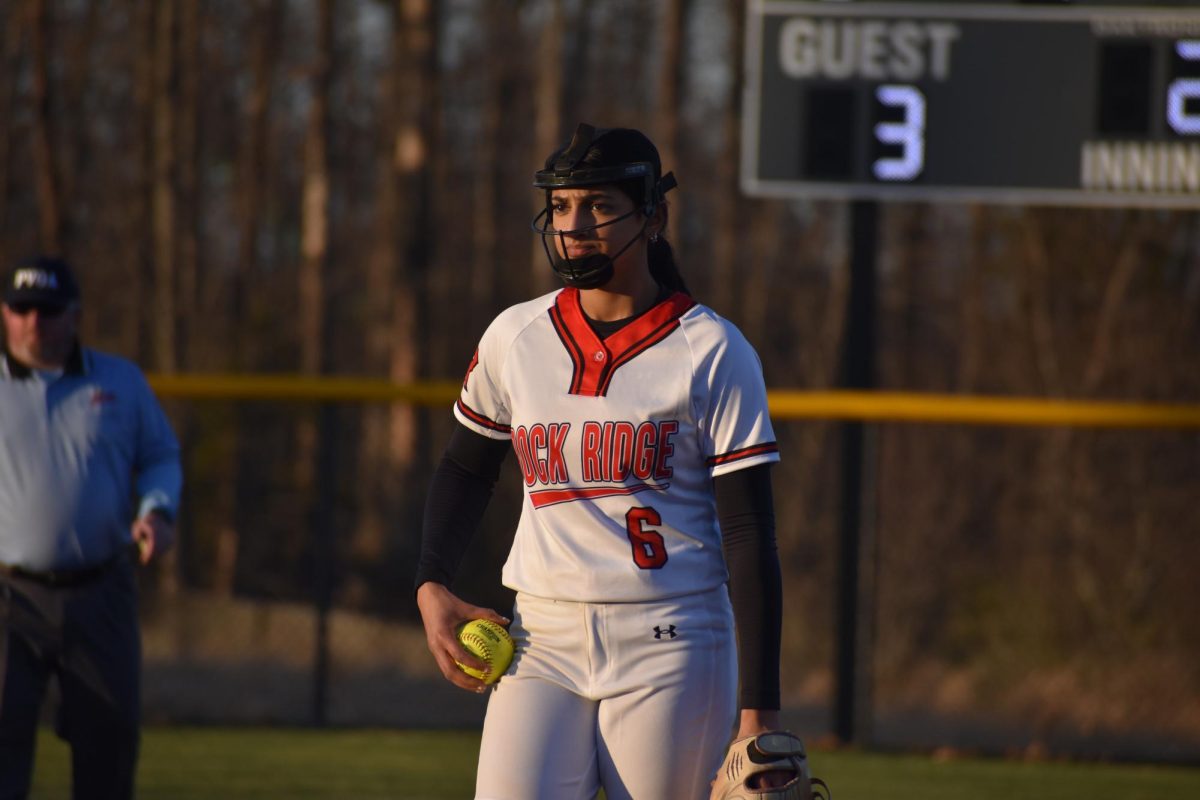
