March 11, 2020

Since the integration of technology-based learning in the 2018-2019 school year, nearly 85,000 students have been subjected to surveillance by content moderation technology. Gaggle, a student surveillance service employed by LCPS and 1,400 other schools nationwide, monitors students’ digital trails “24/7/365,” including homework, projects, essays, emails, artwork, chats, and even social media activity.

Gaggle markets itself as a one-stop solution to student safety in the digital world that supports “digital citizenship” and “creates positive school environments.” Promising “real-time content analysis,” the Bloomington, Ill.-based company claims on its website that in the 2018-2019 school year, it helped “save 722 students from carrying out an act of suicide.”

Gaggle combines machine-learning technology with human content moderation to alert school districts to potential threats of violence, substance use, suicide and self-harm, harassment, sexual content/pornography, and profanity. Gaggle scans communications against a “Blocked Words List” of predetermined words and phrases, and it also reviews content with an in-house AI “Anti-Pornography Scanner.”

Gaggle works as a software plug-in for three major educational platforms: Google G Suite for Education, Office 365, and Canvas. In LCPS, the software monitors G Suite and Office 365.

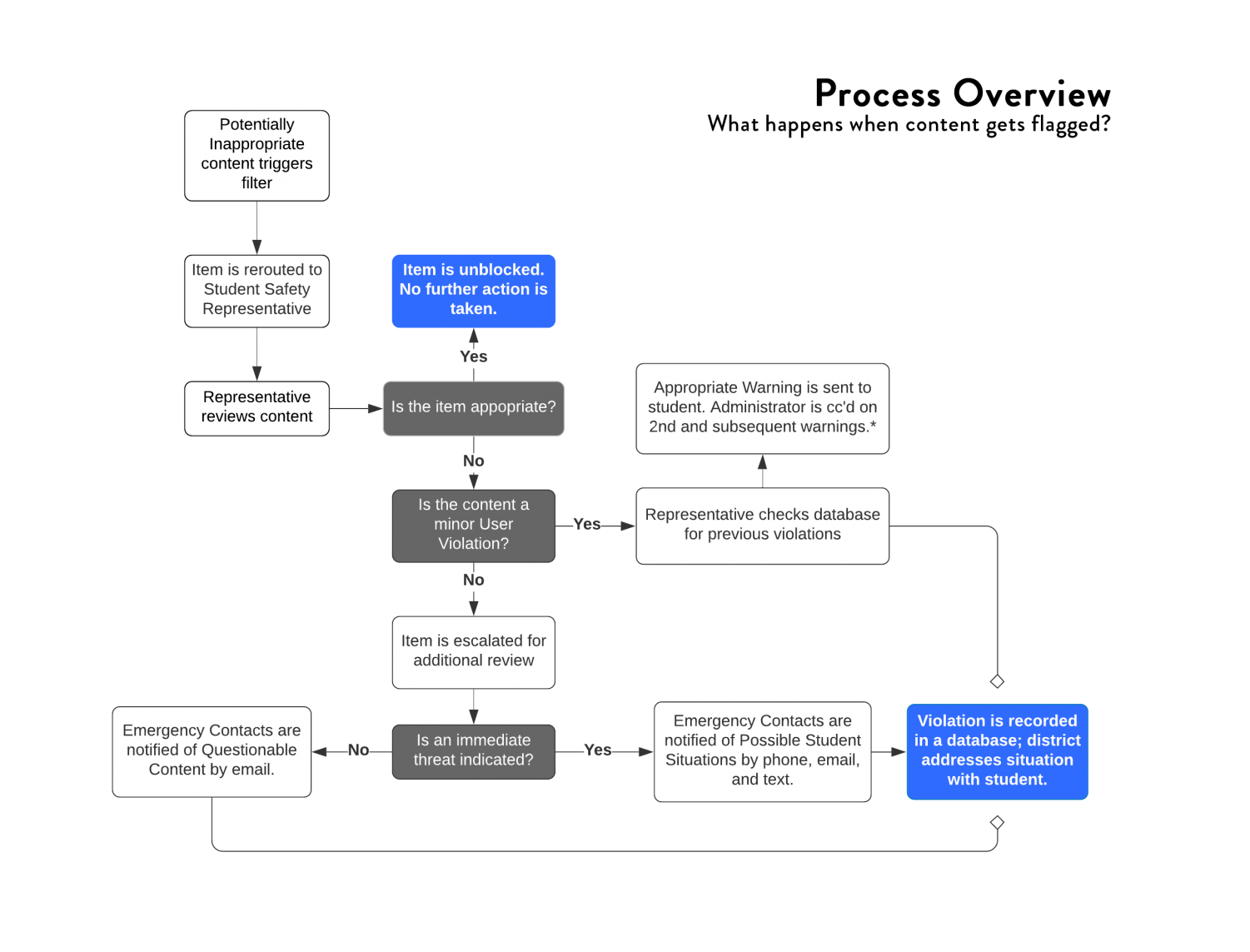

Gaggle handles User Violations on a “three strikes” system, and the response expected from school administrators varies with the severity of the flagged content. According to invoices, incident reports, and company documents obtained from the county through the Freedom of Information Act, as well as documents obtained previously by BuzzFeed News in a nationwide study of Gaggle, the response by Gaggle’s safety management team depends on whether the company’s Safety Management Rubric marks an infraction as a “User Violation,” “Questionable Content,” or “Possible Student Situation.”

After the AI flags content, human moderators assess the urgency of the infraction to determine the appropriate response. According to Gaggle’s Safety Management Procedures, Safety Managers review over “a million blocked student communications” each month. When the algorithm flags a false positive, such as schoolwork that uses inappropriate images or language for a legitimate assignment, human moderators clear the flag on a case-by-case basis.

Content in the “Violations” category can include profanity or vulgar language and suggestive or provocative content. Gaggle’s standard response to a “Violation,” the lowest tier on the rubric, is an email to the student; after repeated offenses, administrators are CC’d on the email. However, according to documents provided to The Blaze, LCPS does not notify students of standard violations. Hugh McArthur, LCPS’s Supervisor for Information Security, said that Gaggle “doesn’t often use that function or recommend it anymore” and “it wasn’t [LCPS’s] goal when implementing the product,” as its purpose was “student safety.”

“I didn’t even know it was called Gaggle. I just knew they had a ‘something’.” — Senior Nordina Taman

“Questionable Content” (QCON) flags cover content that is serious but not an imminent threat. Gaggle representatives respond to QCON incidents by emailing “district specified contacts.”

The most critical category, “Possible Student Situation” (PSS), is reserved for content that displays an “immediate threat” to student safety, and it prompts a phone call directly to school emergency contacts and, in some cases, law enforcement.

Gaggle does not notify LCPS students when their content is flagged as QCON or PSS. However, Gaggle keeps a record of all infractions and identifies repeat offenders on a “Top Concerns” chart on its User Dashboard.

According to incident reports provided by LCPS, Gaggle flagged 3,096 PSS and QCON incidents during the 2018-2019 school year. More than half were flagged for self-harm or suicide, and 120 of the “Violence_Self” flags were marked as “Possible Student Situations.”

LCPS was unable to provide the “Blocked Words List,” saying in an email that the list was “proprietary” and not disclosed by the company. However, BuzzFeed News compiled a list of words flagged as QCON or PSS from incident reports disclosed by various Illinois school districts. In potential self-harm situations, Gaggle flagged “suicide,” “hate myself,” and various iterations of “end my life.” Gaggle also flagged LGBT terms like “lesbian,” “queer,” and “gay” in the context of bullying and harassment. In general, profanities, specific drug and alcohol references, and language associated with sexual content and sexual violence were marked as QCON violations.